Interactive, native Platform

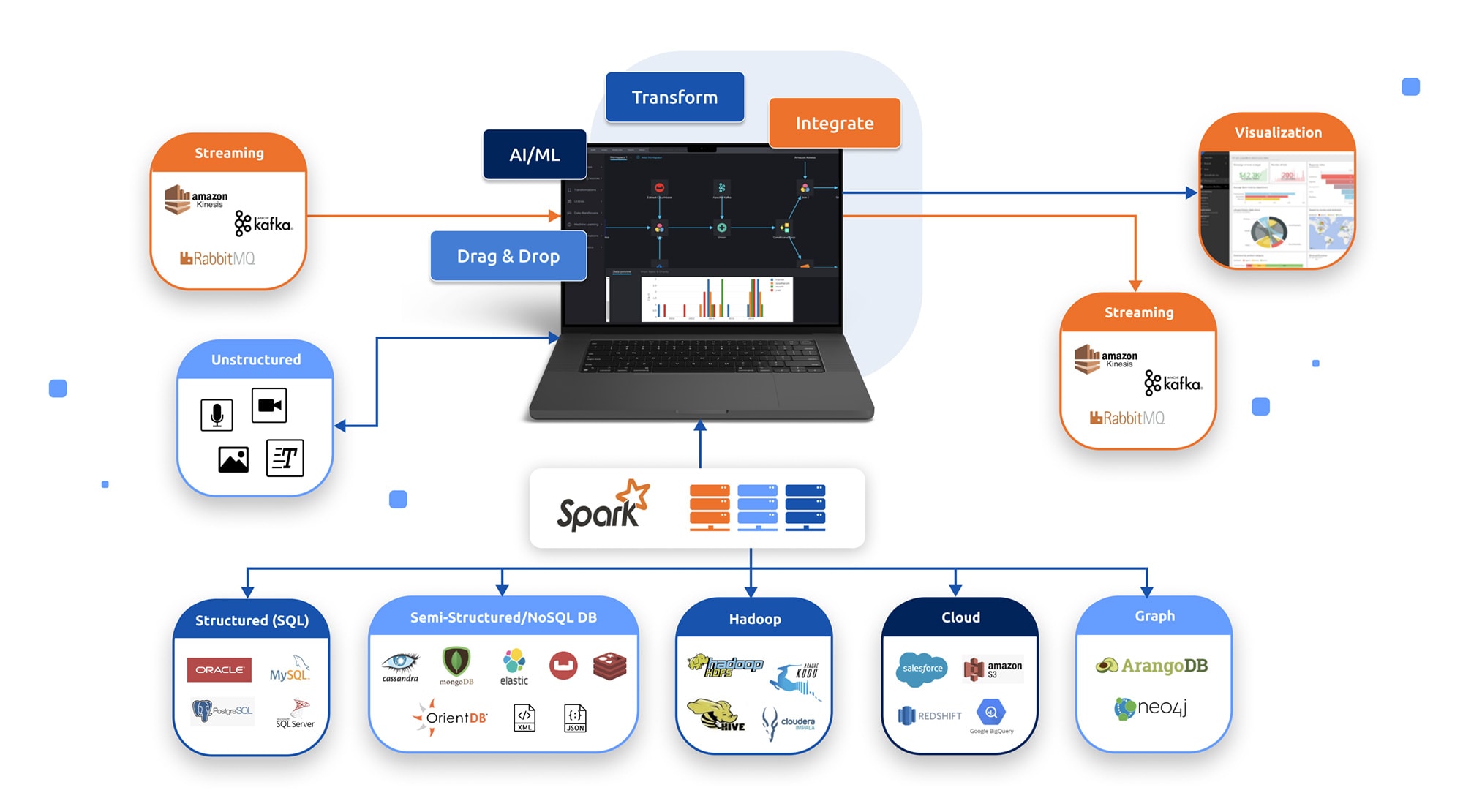

BigBI studio is a fully interactive visual ETL platform that puts the full power of Apache Spark into the hands of BI analysts & data science teams without the need for coding. BigBI is built for Big Data & Lakehouse architectures.

The power of a distributed cluster: volume, performance & scalability

The power of Apache Spark starts with scalability. Apache Spark performs all analytic calculations on a cluster of servers rather than on a single ETL server, which enables highly parallel analytic computation as well as optimized data flows. Even compared with earlier distributed computation paradigms such as Hadoop MapReduce, Apache Spark has been reported to run workloads up to 100x faster, because Spark processing uses the combined memory and computation power of all the machines in the Spark cluster for in-memory calculation, with efficient algorithms that avoid unnecessary reads from and writes to disk.
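The partition-then-merge pattern behind this kind of parallelism can be sketched in plain Python. This is a minimal illustration, not Spark or BigBI code: rows are split into partitions, each partition is aggregated locally in memory, and only the small partial results are merged. Threads stand in for cluster executors, so the sketch shows the data flow rather than a real speedup; all function names are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(rows, num_partitions):
    """Round-robin rows into num_partitions lists, like a simple repartition."""
    partitions = [[] for _ in range(num_partitions)]
    for i, row in enumerate(rows):
        partitions[i % num_partitions].append(row)
    return partitions


def partial_sum_by_key(partition):
    """Per-partition step: aggregate one partition locally, in memory."""
    totals = Counter()
    for key, value in partition:
        totals[key] += value
    return totals


def distributed_sum_by_key(rows, num_partitions=4):
    """Aggregate every partition in parallel, then merge the partial results."""
    partitions = split_into_partitions(rows, num_partitions)
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(partial_sum_by_key, partitions))
    merged = Counter()
    for partial in partials:
        merged.update(partial)  # Counter.update adds counts per key
    return dict(merged)


sales = [("north", 10), ("south", 5), ("north", 7), ("east", 3), ("south", 1)]
print(distributed_sum_by_key(sales))  # {'north': 17, 'south': 6, 'east': 3}
```

For aggregations such as sums, Spark follows a similar idea at scale: partial aggregates are computed per partition before data is shuffled for the final merge.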

Distributed cluster

Strong interactive pipeline building and debugging

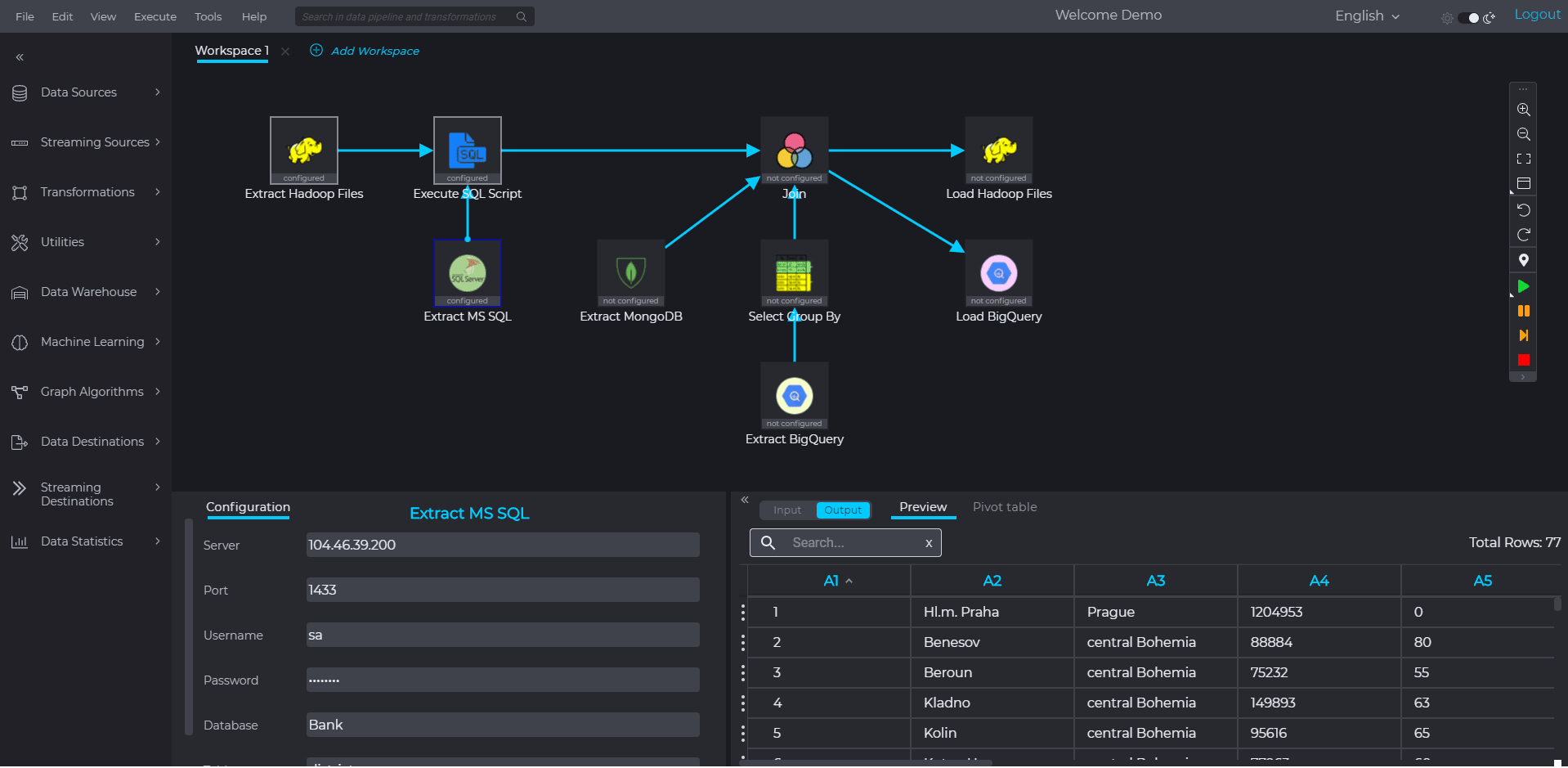

BigBI studio’s visual ETL was built natively for the Apache Spark platform, meaning every visual operation in BigBI studio is performed interactively on the Spark cluster.

Pipelines are built with simple drag & drop operations and configured with easy-to-use wizards.

Data samples can be examined directly from the Spark cluster after each execution step of the data pipeline, in alphanumeric form or as pivot graphs.

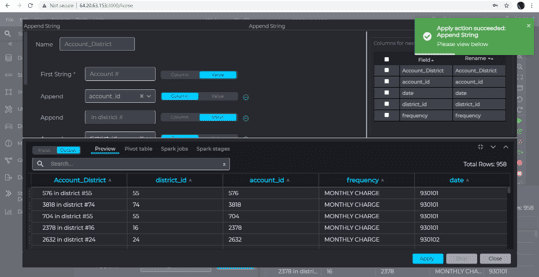

All data sources, transformations & data destinations are placed on the canvas and connected to adjacent steps by drag & click.

Additionally, all data sources, transformations and data destinations can be configured with easy-to-use data wizards. A green success message indicates successful configuration and execution, while an orange error message explains what needs to be corrected.

Define by wizard

Examine data samples per step

While building the big data pipeline, the user can see data samples from the various data sources and how those samples look after each step of the data pipeline is executed, in real time from the Spark cluster. This interactive examination of sample processing expedites pipeline development, reduces errors, and strengthens confidence in the pipeline’s actual results, saving time and effort.
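A minimal plain-Python sketch of per-step sampling, assuming a pipeline is an ordered list of named steps (a hypothetical structure, not BigBI’s internal model): after each step, only the first few rows are kept for display. In BigBI the steps run on the Spark cluster; here they run on an in-memory list of row dicts.

```python
def preview_pipeline(rows, steps, sample_size=3):
    """Run named steps in order and keep a small sample of each step's output.

    `steps` is a list of (name, function) pairs; each function takes a list of
    row dicts and returns a new list. Returns {step_name: sample_rows}, with the
    untouched input recorded under "source".
    """
    current = list(rows)
    samples = {"source": current[:sample_size]}
    for name, step in steps:
        current = step(current)
        samples[name] = current[:sample_size]  # what the user would inspect
    return samples


orders = [
    {"id": 1, "amount": 120.0, "country": "us"},
    {"id": 2, "amount": -5.0, "country": "de"},
    {"id": 3, "amount": 40.0, "country": "us"},
]
steps = [
    ("drop_negative", lambda rs: [r for r in rs if r["amount"] >= 0]),
    ("upper_country", lambda rs: [{**r, "country": r["country"].upper()} for r in rs]),
]
for name, sample in preview_pipeline(orders, steps, sample_size=2).items():
    print(name, sample)
```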

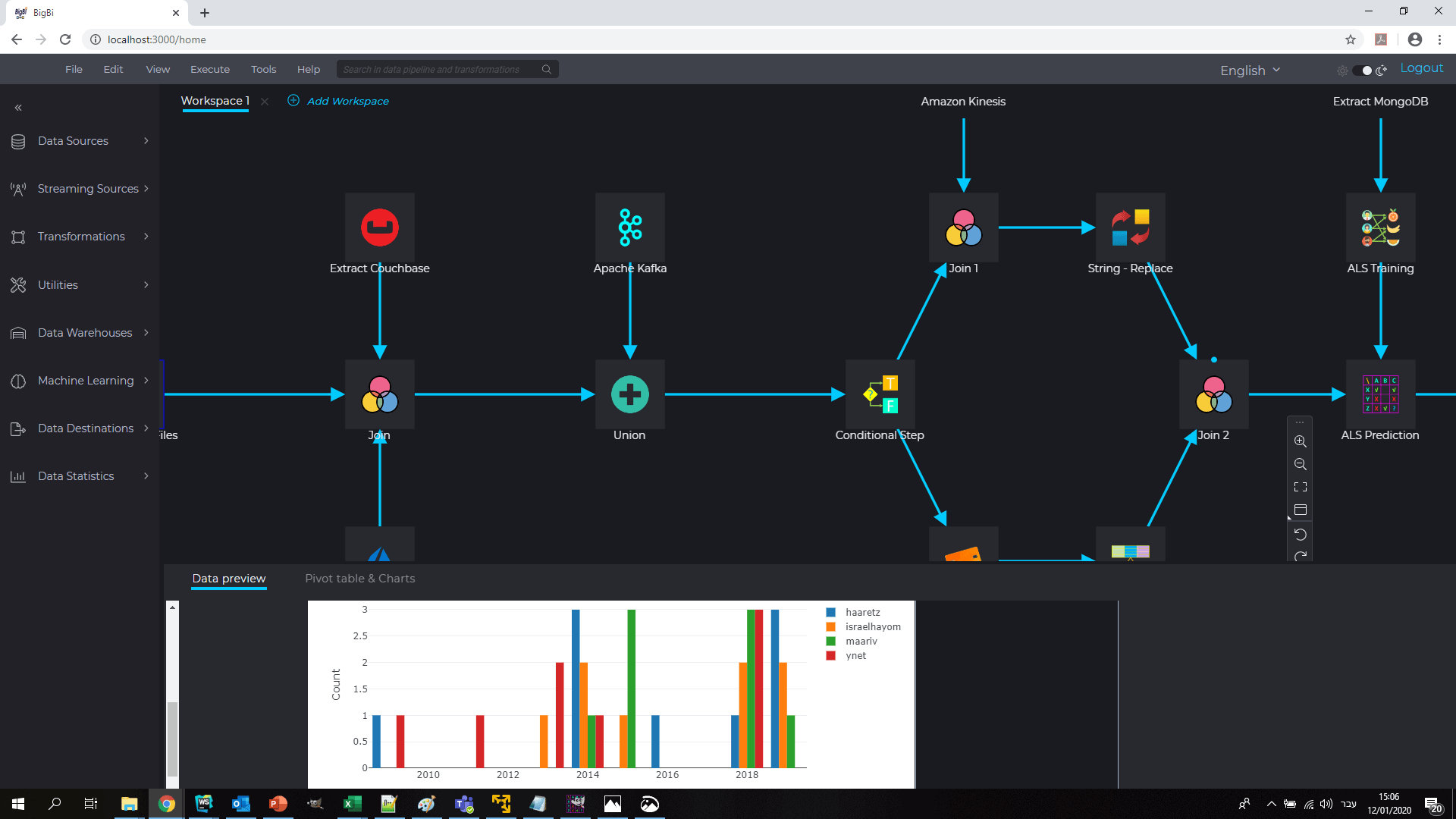

Interim results can be examined both in textual form and as graph visualizations. A pivot graph function lets the analyst examine different relationships between data fields (directly from the Spark cluster) to get comfortable with the results.

Visualizing & pivoting interim results

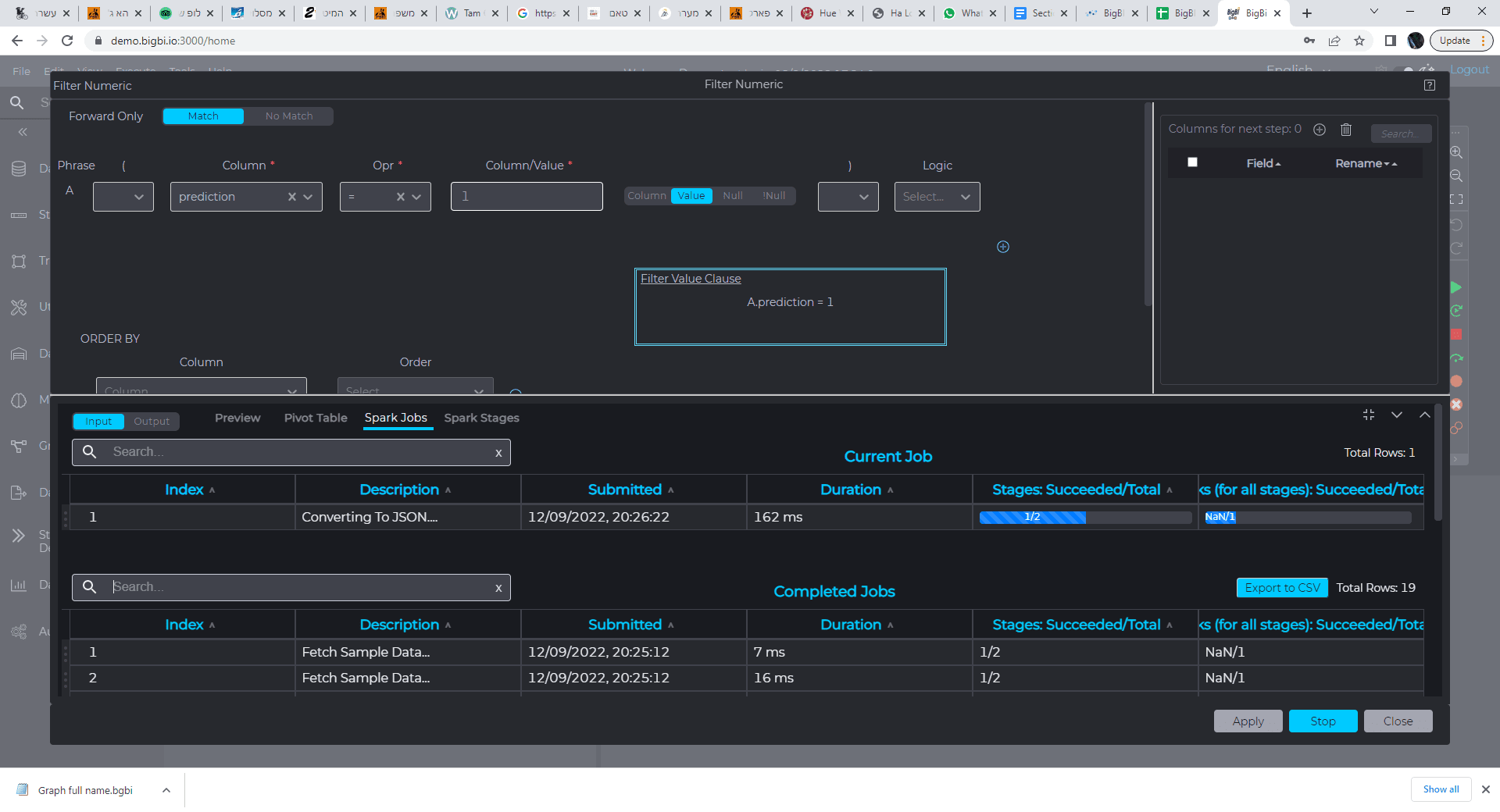

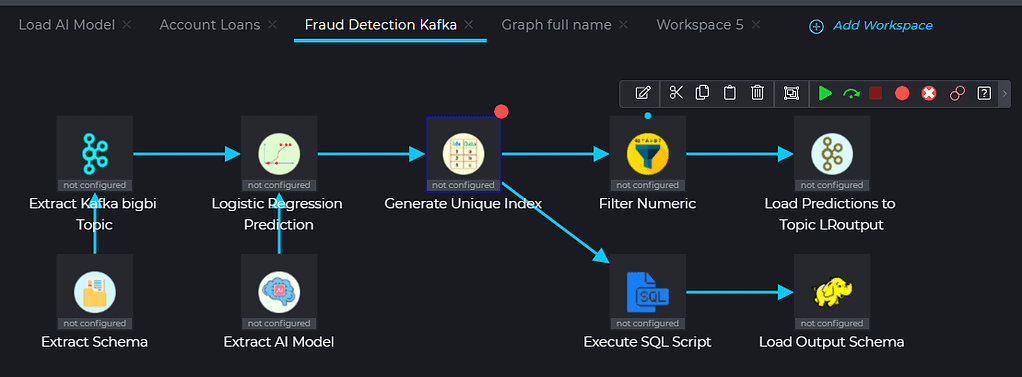

BigBI provides full interactive debugging of data pipelines, including breakpoints, resume, etc., directly on the Spark cluster.

The interactive debugging toolbar runs the developed pipeline on the full data set (not just samples) and shows both final & interim results.

Breakpoints (red dots) can be set along the pipeline to pause the run for examination of interim results, then resume it, etc.
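The pause, inspect and resume behavior of breakpoints can be modeled with a Python generator. This is a hypothetical sketch of the pattern, not BigBI’s debugger API: `debug_run` executes named steps in order, yields the interim rows whenever a step listed in `breakpoints` finishes, and continues only when the caller asks for the next value.

```python
def debug_run(rows, steps, breakpoints=frozenset()):
    """Execute (name, function) steps in order, pausing after breakpoint steps.

    Each pause yields (step_name, interim_rows); calling next() on the
    generator resumes execution. The final result is yielded as ("done", rows).
    """
    current = list(rows)
    for name, step in steps:
        current = step(current)
        if name in breakpoints:
            yield name, current  # paused: the caller can inspect interim rows
    yield "done", current


steps = [
    ("double", lambda rs: [r * 2 for r in rs]),
    ("keep_large", lambda rs: [r for r in rs if r > 4]),
    ("total", lambda rs: [sum(rs)]),
]
run = debug_run([1, 2, 3, 4], steps, breakpoints={"double"})
print(next(run))  # ('double', [2, 4, 6, 8])  paused at the breakpoint
print(next(run))  # ('done', [14])  resumed and ran to the end
```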

The user can monitor the advancement and health of the relevant Spark jobs (light blue) in real time.

Only a Spark-native visual ETL can give the user an interactive, real-time view of the data’s journey through the pipeline, enabling QA and the level of confidence we’d like to have when processing our big & modern data.

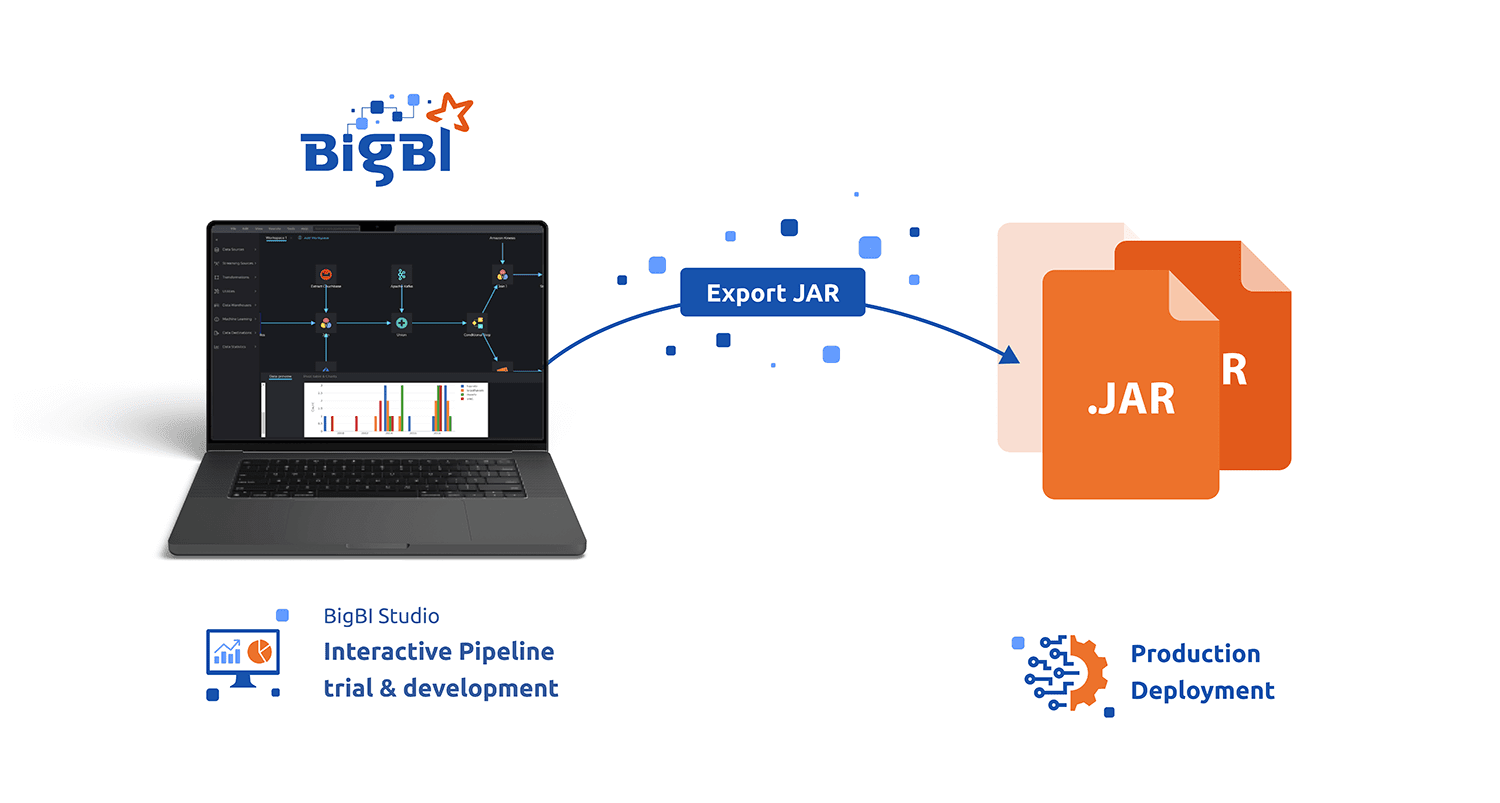

Automatic JAR production for pipeline deployment

In addition to interactive pipeline building, BigBI can generate a compiled JAR version of the pipeline once development is complete. The JAR can later be used within the organization’s orchestration solution, such as Airflow or NiFi, or connected to a CI/CD process.

During development, BigBI studio works interactively for sample processing & debugging.

After initial development, the analyst can run the data pipeline interactively on the full data set using the “Run pipeline” command.

However, many data pipelines need to be deployed as part of the enterprise IT in order to run constantly, periodically or when certain triggers are met.

Therefore, BigBI created the “Export JAR” command, which encapsulates the Spark-based data pipeline as a compiled Scala JAR file to be deployed with the enterprise orchestration tool of choice, including Apache Airflow, Apache NiFi, or simpler schedulers such as crontab.

Development-oriented organizations can integrate the JAR into their systems using their CI/CD processes & tools.

Any format to any format big data integration platform

With the full data integration power of Apache Spark, BigBI can process all data sources and destinations: structured, semi-structured and unstructured data, including:

- SQL databases

- NoSQL databases

- Elasticsearch

- Files in traditional formats such as CSV or PDF, as well as JSON, XML, Parquet or Avro

- Hadoop, whether in HDFS or through its database interfaces (Hive, Impala, etc.)

- Cloud data sources

Streaming & static data integration

Streaming interfaces can also be used to fit the data pipeline into an event-driven microservices enterprise architecture.

The data can be integrated, cleansed & aggregated to fuel real-time visualization dashboards, enrich any data repository, or be converted and saved in any format.

Processed results (such as real-time alerts & recommendations) can similarly be streamed out to feed downstream systems and modules.
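A minimal sketch of the aggregate-then-alert flow, using fixed, non-overlapping (tumbling) time windows. It runs over a finite Python list of `(timestamp, key)` events; the window size, threshold and event names are illustrative assumptions. A Spark deployment would instead use Structured Streaming’s windowed aggregation, which also maintains state incrementally and handles late data.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, key) for fixed, non-overlapping windows.

    `events` is an iterable of (timestamp_seconds, key) pairs.
    """
    counts = defaultdict(int)
    for ts, key in events:
        window_start = ts - (ts % window_seconds)  # align to the window boundary
        counts[(window_start, key)] += 1
    return dict(counts)


def alerts(window_counts, threshold):
    """Emit an alert record for every window/key whose count reaches threshold."""
    return [
        {"window_start": start, "key": key, "count": n}
        for (start, key), n in sorted(window_counts.items())
        if n >= threshold
    ]


events = [(0, "login_fail"), (12, "login_fail"), (30, "login_fail"),
          (65, "login_fail"), (70, "purchase")]
counts = tumbling_window_counts(events, window_seconds=60)
print(alerts(counts, threshold=3))
# [{'window_start': 0, 'key': 'login_fail', 'count': 3}]
```

In a streaming pipeline, the alert records would be written to an output stream for downstream systems rather than printed.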

Any format modern data integration

The diagram shows a sample of the available data sources/destinations; the entire set of Spark data sources and destinations is available.

*Logos are representations of integration options

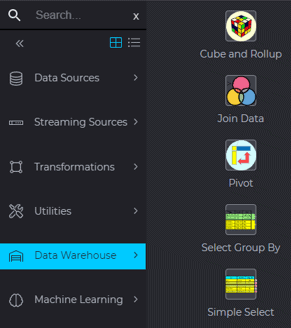

Rich set of data processing operators & algorithms

Harnessing the full power of Apache Spark for visual no-code big data processing, BigBI offers one of the richest sets of operators and transformations for big & modern data integration including:

- Rich set of data sources & destinations

- Streaming sources & destinations

- Transformations for numerical, logical & string calculations, row & column based

- Self-defined transformations (in SQL, R or Python)

- Time series enablement using a state machine operator

- Data warehouse operators including cube & rollup

- Machine learning enablement based on Spark MLlib, including groups of operators for classification & regression, feature transformers, feature extractors, feature selectors & ML statistics

- Graph & graph algorithm operators

- Security & privacy utilities such as encryption/decryption, public key management, column hashing & masking
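Assuming the cube & rollup operators listed above follow standard SQL/Spark `ROLLUP` semantics (Spark DataFrames expose both as `rollup` and `cube`), a rollup produces a subtotal for every prefix of the grouping columns plus a grand total. The plain-Python sketch below shows that behavior; `rollup_sum` is a hypothetical helper, and `None` marks a rolled-up level the way `NULL` does in SQL.

```python
from collections import defaultdict


def rollup_sum(rows, dims, measure):
    """Sum `measure` for every prefix of `dims`, like SQL GROUP BY ROLLUP.

    For dims [a, b] this yields groups (a, b), (a, None) and (None, None),
    where None marks a rolled-up (subtotal) level.
    """
    totals = defaultdict(float)
    for row in rows:
        # Levels run from the full key (all dims) down to the grand total (no dims).
        for level in range(len(dims), -1, -1):
            key = tuple(row[d] if i < level else None for i, d in enumerate(dims))
            totals[key] += row[measure]
    return dict(totals)


sales = [
    {"region": "EU", "product": "A", "amount": 10.0},
    {"region": "EU", "product": "B", "amount": 5.0},
    {"region": "US", "product": "A", "amount": 7.0},
]
totals = rollup_sum(sales, ["region", "product"], "amount")
print(totals[("EU", None)])    # 15.0  (EU subtotal)
print(totals[(None, None)])    # 22.0  (grand total)
```

A cube differs only in aggregating over every subset of the dimensions rather than every prefix, so for two dimensions it would also produce per-product totals such as `(None, "A")`.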

As Apache Spark is a vibrant open-source ecosystem, more and more Spark capabilities can be packaged into BigBI studio, including features of future Spark versions.

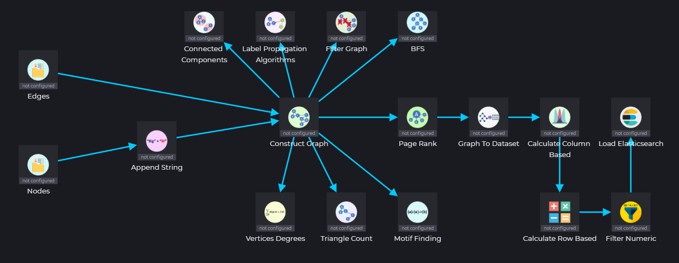

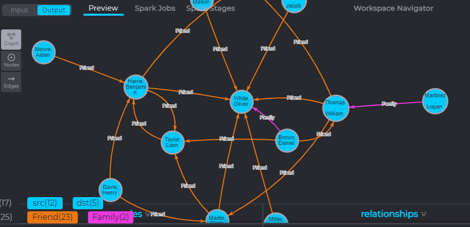

Spark GraphX: building and analyzing graphs as part of the big data pipeline

Graphs and graph algorithms are a strong paradigm for link analysis, used in many vertical applications such as social influence analysis, fraud investigation, cyber security, homeland security, transportation, or resource allocation & optimization.

BigBI enables building and analysis of graphs as part of the big data pipeline and with the computation powers of the Spark cluster.

BigBI uses Apache Spark GraphX to build, analyze & visualize graph data.

Graphs can be built as part of the data pipeline from 2 tables (nodes & edges) and analyzed with a rich set of graph algorithms (for example, shortest path or PageRank). The graph can later be saved into any of the supported persistent data repository formats for future inquiry, or decomposed back into data sets.
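To illustrate the nodes-and-edges model with one of the algorithms named above, here is a plain-Python PageRank sketch using the standard iterative method with a damping factor of 0.85. It is not GraphX code (GraphX ships its own distributed PageRank); the function name, the fixed iteration count, and the list/tuple shapes of the two tables are assumptions for illustration.

```python
def pagerank(nodes, edges, damping=0.85, iterations=50):
    """Iterative PageRank over a nodes table and an (src, dst) edges table.

    Assumes every edge endpoint appears in `nodes`. Rank held by nodes with
    no outgoing edges (dangling nodes) is spread evenly over all nodes, so
    the ranks always sum to 1.
    """
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    out_links = {v: [] for v in nodes}
    for src, dst in edges:
        out_links[src].append(dst)
    for _ in range(iterations):
        dangling = sum(rank[v] for v in nodes if not out_links[v])
        base = (1.0 - damping) / n + damping * dangling / n
        new_rank = {v: base for v in nodes}
        for v in nodes:
            targets = out_links[v]
            for t in targets:
                # Each node splits its damped rank evenly over its out-links.
                new_rank[t] += damping * rank[v] / len(targets)
        rank = new_rank
    return rank


nodes = ["a", "b", "c", "d"]
edges = [("a", "b"), ("b", "c"), ("c", "a"), ("a", "c"), ("d", "c")]
ranks = pagerank(nodes, edges)
for node, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(node, round(score, 3))
```

Node `c`, which receives links from three other nodes, ends up with the highest score, while `d`, which nothing links to, keeps only the baseline share.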

Building and analyzing a graph as a part of the data pipeline

The graph’s calculated attributes (such as an entity’s PageRank, which measures its relative influence in the graph) can later be attached to specific data entities as enrichment and further analyzed by the data pipeline logic, including machine learning algorithms.
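Attaching a graph score to entity rows is essentially a left join. In this hypothetical sketch, `scores` maps entity IDs to precomputed PageRank values (the numbers are illustrative only), and entities missing from the graph receive a default of 0.0 so that downstream ML operators always see a value.

```python
def enrich_with_scores(entities, scores, key="id", column="pagerank", default=0.0):
    """Left-join graph scores onto entity rows as a new feature column.

    Entities with no score (for example, nodes absent from the graph) get `default`.
    """
    return [{**row, column: scores.get(row[key], default)} for row in entities]


customers = [{"id": "a", "segment": "retail"}, {"id": "d", "segment": "corp"}]
scores = {"a": 0.39, "b": 0.21, "c": 0.40}  # illustrative values only
print(enrich_with_scores(customers, scores))
# [{'id': 'a', 'segment': 'retail', 'pagerank': 0.39}, {'id': 'd', 'segment': 'corp', 'pagerank': 0.0}]
```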

Machine learning and pipeline deployment

Big data analytics often requires machine learning and AI for smart data cleansing, smart data aggregation or enrichment. In such cases, data science teams can help BI analysts by recommending the right algorithms and parameters, while BI teams can help data scientists by preparing data for data science work.

With BigBI:

1. ML algorithms, and the feature preparation operators they require, can easily be incorporated into the data pipeline using BigBI’s rich set of ML operators.

2. Data scientist-defined Python/R algorithms can be included with the “Execute Python” or “Execute R” operators.

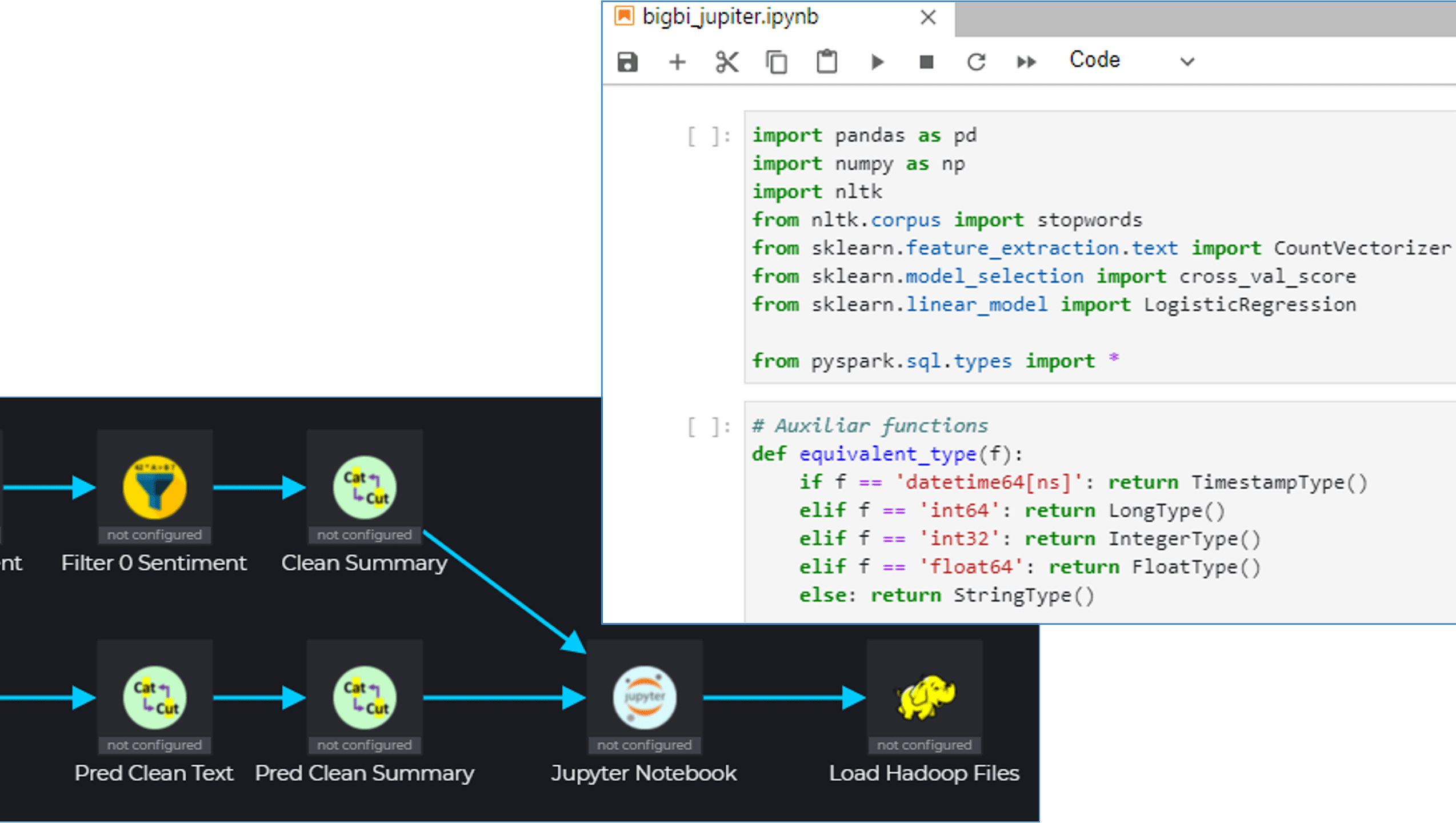

3. A Jupyter notebook can be connected to the data pipeline so data scientists can carry out feature preparation.
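Items 2 and 3 can be illustrated with a small sketch that separates feature preparation from a data-scientist-supplied model. Everything here is an assumption for illustration: the `execute_python` contract (a function that takes and returns a list of row dicts), the z-score feature, and the fixed logistic weights are hypothetical and do not describe BigBI’s actual operator interface. In BigBI the feature preparation would run on Spark; here both steps run in plain Python.

```python
import math
from statistics import mean, pstdev


def standardize(rows, column):
    """Feature preparation: add `<column>_z`, the z-score of `column`."""
    values = [r[column] for r in rows]
    mu, sigma = mean(values), pstdev(values)
    return [{**r, f"{column}_z": (r[column] - mu) / sigma if sigma else 0.0}
            for r in rows]


def user_model(rows):
    """Stand-in for a data-scientist-defined algorithm: a fixed logistic score."""
    weight, bias = 1.5, -0.2  # hypothetical pre-trained parameters
    return [{**r, "score": 1.0 / (1.0 + math.exp(-(weight * r["amount_z"] + bias)))}
            for r in rows]


def execute_python(rows, fn):
    """Hypothetical operator contract: pass rows in, validate the rows returned."""
    result = fn(rows)
    if not all(isinstance(r, dict) for r in result):
        raise TypeError("user function must return a list of row dicts")
    return result


rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 20.0}, {"id": 3, "amount": 30.0}]
scored = execute_python(standardize(rows, "amount"), user_model)
for r in scored:
    print(r["id"], round(r["amount_z"], 3), round(r["score"], 3))
```

Keeping the user function to a plain rows-in, rows-out contract is what lets the surrounding preparation steps change without touching the model code.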

This tight integration:

- Simplifies data preparation for the data scientist

- Streamlines integration between data science & BI teams

- Enables one-click pipeline deployment, where the full data pipeline, including the ML algorithm in Python, can be deployed to production with a single click

- Creates efficient processing, where feature preparation runs as automatically generated Spark Scala code and only the ML algorithm runs in Python, simplifying and expediting deployment of the ML pipeline.